What if the AI agent makes a mistake? Designing for safe failure

AI agents are getting smarter by the day.

They plan, decide, act and increasingly operate in real business processes.

But as teams adopt agents for tasks like lead qualification, email replies or internal automation, one question keeps coming up:

What if the AI agent makes a mistake?

And frankly, it’s the right question to ask.

Mistakes will happen. The point is: then what?

No matter how well an AI agent is trained or configured, it will occasionally get things wrong.

It might:

-

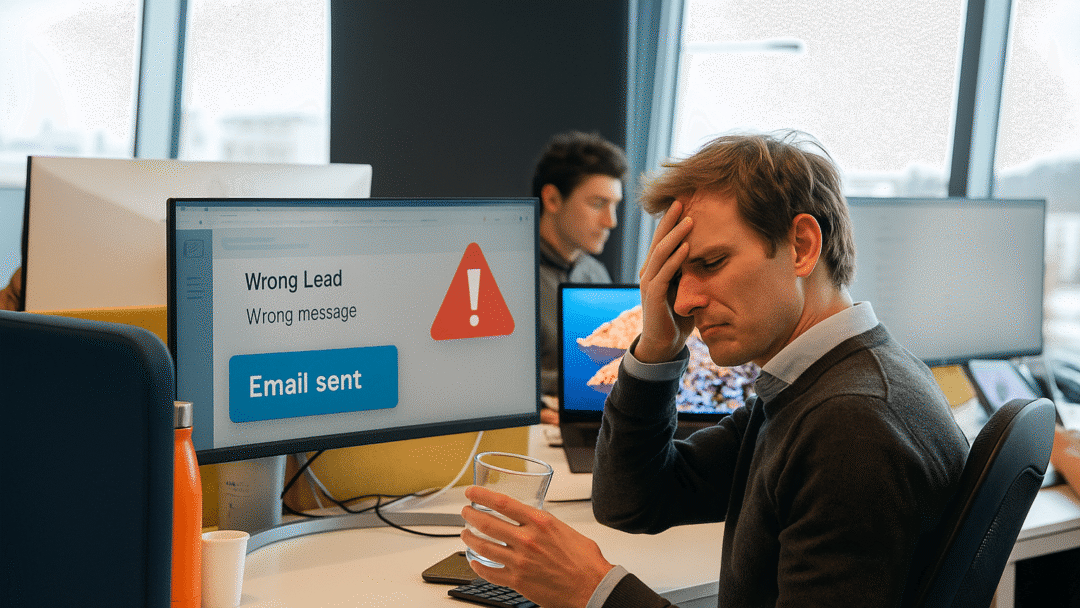

Pull the wrong contact from your CRM

-

Misinterpret context

-

Act at the wrong moment

In human terms: it sends an email to the wrong lead, with the wrong message.

The solution isn’t to stop automation altogether.

The solution is to design for safe failure.

- Pre-checks before action

- Human-in-the-loop for critical steps

- Logging and alerts

- Pattern recognition and learning

Designing for safe failure done right

Whether it’s due to incomplete data, system delays or unexpected user input, the risk of failure is real.

What matters most isn’t preventing every mistake, but designing the system to handle failure safely, transparently and recoverably.

Pre-checks before action

Prevention is still the best cure. Before an agent takes action, we build in checks to validate the context: Is the required data present? Does the company name match the contact record? Are key fields filled out and verified? These small checks prevent big errors, especially in outbound communication or transaction-related tasks.
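To make this concrete, here is a minimal sketch of a pre-check in Python. The `Contact` record, its field names and the `pre_check` function are hypothetical and only illustrate the three questions above; a real CRM integration will look different.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Contact:
    # Hypothetical CRM record; the field names are illustrative assumptions.
    email: Optional[str]
    company: Optional[str]
    email_verified: bool = False


def pre_check(contact: Contact, expected_company: str) -> List[str]:
    """Return a list of problems; an empty list means the action may proceed."""
    problems: List[str] = []

    # Is the required data present and verified?
    if not contact.email:
        problems.append("missing email")
    elif not contact.email_verified:
        problems.append("email not verified")

    # Does the company name match the contact record?
    if not contact.company:
        problems.append("missing company")
    elif contact.company.strip().lower() != expected_company.strip().lower():
        problems.append("company mismatch")

    return problems


contact = Contact(email="ana@example.com", company="Acme", email_verified=True)
issues = pre_check(contact, expected_company="Globex")
if issues:
    print("Blocked:", ", ".join(issues))  # prints "Blocked: company mismatch"
else:
    print("OK to send")
```

Returning a list of reasons rather than a plain yes or no keeps the check useful later on: the same reasons can be logged and reviewed.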

Human-in-the-loop for critical steps

Some actions are too sensitive to automate fully. For tasks like sending external emails, confirming orders or initiating a contract, we design agents that pause. They generate the action, but wait for human approval before executing it. This preserves speed without compromising control.
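One way to picture this pause is an approval gate: the agent submits a proposed action, and anything on a list of critical action types waits until a person approves it. The sketch below is an assumption about how such a gate could look; `ApprovalGate`, `ProposedAction` and the `CRITICAL_ACTIONS` names are hypothetical, not a specific product API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical set of action types that always need human sign-off.
CRITICAL_ACTIONS = {"send_external_email", "confirm_order", "initiate_contract"}


@dataclass
class ProposedAction:
    kind: str
    payload: Dict[str, str]
    execute: Callable[[Dict[str, str]], str]  # what runs once the action is allowed


@dataclass
class ApprovalGate:
    pending: List[ProposedAction] = field(default_factory=list)

    def submit(self, action: ProposedAction) -> str:
        """Run routine actions immediately; park critical ones for review."""
        if action.kind in CRITICAL_ACTIONS:
            self.pending.append(action)
            return "pending_approval"
        return action.execute(action.payload)

    def approve(self, index: int) -> str:
        """Called by a human reviewer; only now does the action run."""
        action = self.pending.pop(index)
        return action.execute(action.payload)

    def reject(self, index: int) -> None:
        """Discard a parked action without running it."""
        self.pending.pop(index)


gate = ApprovalGate()
draft = ProposedAction(
    kind="send_external_email",
    payload={"to": "lead@example.com"},
    execute=lambda p: "sent to " + p["to"],
)
print(gate.submit(draft))  # prints "pending_approval"
print(gate.approve(0))     # prints "sent to lead@example.com"
```

The agent still does the slow part, drafting the action, so the reviewer only has to approve or reject it.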

Logging and alerts

Not every issue can be prevented. That’s why observability matters. Every action an agent takes is logged, anomalies are flagged and the right person is alerted immediately. This is a crucial step when you're building a system that needs to be trustworthy.
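As a rough illustration, the sketch below logs every action with Python's standard `logging` module and calls an alert function when a simple anomaly rule fires. The rule itself (an unusually high recipient count), the threshold and the `record_action` helper are assumptions made for the example; in practice the alert callback would notify whoever owns the workflow.

```python
import logging
from typing import Callable, Dict, List

logging.basicConfig(level=logging.INFO,
                    format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("agent")


def record_action(action: str, details: Dict[str, object],
                  alert: Callable[[str], None],
                  max_recipients: int = 50) -> bool:
    """Log the action; flag it and send an alert if it looks anomalous.

    Returns True when the action was flagged. The recipient-count rule and
    its default threshold are illustrative assumptions.
    """
    # Every action is logged, flagged or not.
    log.info("action=%s details=%s", action, details)

    recipients = details.get("recipients", 0)
    if isinstance(recipients, int) and recipients > max_recipients:
        log.warning("anomaly action=%s recipients=%d", action, recipients)
        alert(f"{action}: {recipients} recipients exceeds limit of {max_recipients}")
        return True
    return False


# Stand-in for a real notification channel: collect alerts in a list.
alerts: List[str] = []
record_action("send_email", {"recipients": 3}, alerts.append)
record_action("send_email", {"recipients": 400}, alerts.append)
print(alerts)  # prints ['send_email: 400 recipients exceeds limit of 50']
```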

Pattern recognition and learning

Good agents don’t just act. They improve. When something goes wrong, we design our agents to spot it, learn from it and adjust. Sometimes that means changing the flow, restructuring prompts or adding new fallback logic. The key is that agents don’t just react; they also adapt.
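A very simplified sketch of the "spot it" part: count failure reasons per step and report the ones that keep recurring, so that the step can be switched to a safer path. The `FailureTracker` class and its threshold are hypothetical. It only detects patterns; deciding how to change the flow or the prompts remains a separate step, and often a human one.

```python
from collections import Counter
from typing import List, Tuple


class FailureTracker:
    """Counts failure reasons per step and reports the ones that recur."""

    def __init__(self, threshold: int = 3):
        self.threshold = threshold  # illustrative cut-off; tune per workflow
        self.counts: Counter = Counter()

    def record(self, step: str, reason: str) -> None:
        self.counts[(step, reason)] += 1

    def recurring(self) -> List[Tuple[str, str]]:
        """Return (step, reason) pairs seen at least `threshold` times."""
        return [key for key, n in self.counts.items() if n >= self.threshold]

    def needs_fallback(self, step: str) -> bool:
        """True when a step has a recurring failure and should use a safer path."""
        return any(s == step for s, _ in self.recurring())


tracker = FailureTracker(threshold=2)
tracker.record("lookup_contact", "company mismatch")
tracker.record("lookup_contact", "company mismatch")
tracker.record("draft_reply", "missing context")

print(tracker.recurring())                       # [('lookup_contact', 'company mismatch')]
print(tracker.needs_fallback("lookup_contact"))  # True
print(tracker.needs_fallback("draft_reply"))     # False
```

A step that needs a fallback could, for example, be routed through human approval until the underlying cause is fixed.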

Safe failure = good design

Perfect agents simply don’t exist. What we believe in instead are agents that are reliable, accountable and transparent.

Because in real-world systems, trust doesn’t come from flawless execution.

It comes from knowing what happens when something breaks and having a system designed for that.
